LLM Course | Chat


10 August 2024

13:41

LLM Course | Chat

Thanks for the feedback. But our course is more of an introduction: we teach the fundamentals and the main approaches, so that you can later dig into the finer details when needed.

13:42

février

In that case, I'd define the baseline as being able to build a project that can go into production. So it would also make sense to include walkthroughs of real projects.

NT

13:44

Nikita Tenishev

In reply to this message

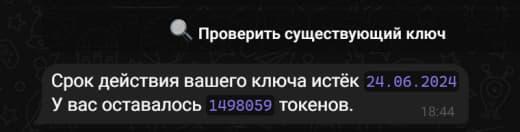

Можно докупить токены, 1 млн токенов за 500 руб, перевести на карту

Алерону и после я обновлю ключ. Если интересно, то ко мне в лс


11 August 2024

L

12:16

LLM Course | Chat

?

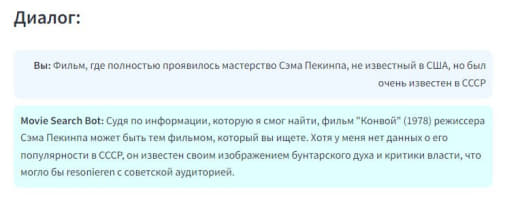

🤖 AI Practice | LLM | ChatGPT | GenAI 11.08.2024

12:10:54

In reply to this

message

🙀Смотрите на

как же мощны лапищи студентов нашего курса! Это топ-25.

Нагоняйте скорее!

В конце августа устроим розыгрыш мерча среди тех кто дошел до сертификата и тех кто сдал проекты. Успевайте попасть в число чемпионов нашего курса!

А всем студентам из списка огромный респект за настойчивость и спасибо что вы с нами! 🫡

В конце августа устроим розыгрыш мерча среди тех кто дошел до сертификата и тех кто сдал проекты. Успевайте попасть в число чемпионов нашего курса!

А всем студентам из списка огромный респект за настойчивость и спасибо что вы с нами! 🫡

12:45

Сергей

Hi. Selling a bot for 10,000 rubles: https://t.me/Ero_style_bot.

800+ users according to Telegram stats, about 100 in the database, 2-3 active users per day on average; it brings 1-2 users per day to the Telegram channel on average; a subscription is built in.

There are posts about it in a few channels.

14 August 2024

14:55

Stantinko

Hi everyone.

Could you help me with a small problem? In chapter 4 (Agents) I tried replacing the course model with a different one (not OpenAI). Everything seems to work, but the agent gets stuck in a loop: the Final Answer is correct, but right after it come lines saying "Invalid or incomplete response", as if the agent doesn't realize it has produced a proper answer and starts over.

Has anyone run into this?

16:16

khmelkoff

I tried that a while ago. It works poorly. There are two problems: the prompt inside was (at least back then) tailored to OpenAI, and the model has to be instruction-tuned. Mistral, for example, likes to add extra brackets to the code in the Python agent, which naturally breaks it.
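One possible cause of the loop, offered as an assumption rather than a diagnosis confirmed in this thread: ReAct-style agents parse the model's free text, and a model that was not tuned for the OpenAI-oriented prompt may write `Final Answer:` and then keep going with a new `Action:`. A strict parser rejects that mixed output and the agent retries. The simplified parser below is a hypothetical stand-in (not LangChain's actual implementation) that shows how a strict and a lenient reading of the same output differ.

```python
import re

FINAL_MARKER = "Final Answer:"
# Matches "Action: <tool>" followed by "Action Input: <input>" (simplified ReAct format).
ACTION_RE = re.compile(r"Action\s*:(.*?)\nAction\s*Input\s*:(.*)", re.DOTALL)
# Start of a new ReAct step that the model appended after its answer.
NEXT_STEP_RE = re.compile(r"\n\s*(?:Thought|Action)\s*:")


def parse_react(text: str, strict: bool = True) -> tuple:
    """Hypothetical parser: returns ("finish", answer) or ("action", tool, tool_input)."""
    has_final = FINAL_MARKER in text
    action = ACTION_RE.search(text)
    if has_final and action and strict:
        # Strict mode: a final answer mixed with a new action is treated as ambiguous.
        raise ValueError("Invalid or incomplete response")
    if has_final:
        answer = text.split(FINAL_MARKER, 1)[1]
        # Lenient mode: keep only the text before any step added after the answer.
        return ("finish", NEXT_STEP_RE.split(answer, 1)[0].strip())
    if action:
        return ("action", action.group(1).strip(), action.group(2).strip())
    raise ValueError("Invalid or incomplete response")


# Made-up output from a model that "keeps talking" after its answer.
model_output = (
    "Thought: I know the answer.\n"
    "Final Answer: Paris\n"
    "Action: search\n"
    "Action Input: capital of France"
)

try:
    parse_react(model_output)
except ValueError as err:
    print("strict:", err)

print("lenient:", parse_react(model_output, strict=False))  # lenient: ('finish', 'Paris')
```

In LangChain itself, `AgentExecutor` accepts `max_iterations` to cap such loops and `handle_parsing_errors`; as far as I know, "Invalid or incomplete response" is the observation LangChain feeds back to the model when `handle_parsing_errors=True` and parsing fails, which would fit this explanation.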

17:05

<<R>>

Good evening. My key has expired; how can I extend it so I can finish the course?

15 August 2024

07:35

Timur Makhmutov

Hi everyone!

Is it just me, or are the videos on Stepik not loading?

13:31

LLM Course | Chat

Here's a working method for quickly fixing YouTube on any system: https://www.youtube.com/watch?v=Dvu2SUB8LvU&t=393s

(a YouTube link 😂)

Works great, I use it myself: quick and convenient.

19:31

Дима Ткачук

Good evening, my key has expired. How do I request a new one?

19:35

Nikita Tenishev

Good evening, try checking in the bot again; I've updated it.

16 August 2024


17 August 2024

L

12:12

LLM Course | Chat

?

🤖 AI Practice | LLM | ChatGPT | GenAI 17.08.2024

07:59:01

In reply to this

message

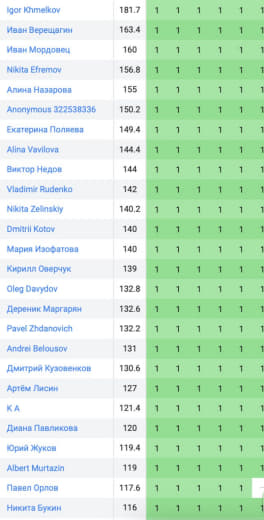

Доброе утро, LLM

оптимисты!

Небольшое обновление курса: в уроке про "Память в LangChain" появилась задача для закрепления навыков, про которую давно спрашивали!

Го решать!🏃♂️🏃🏃♀️

Небольшое обновление курса: в уроке про "Память в LangChain" появилась задача для закрепления навыков, про которую давно спрашивали!

Го решать!🏃♂️🏃🏃♀️

AG

15:34

Artem Gruzdov

In reply to this message

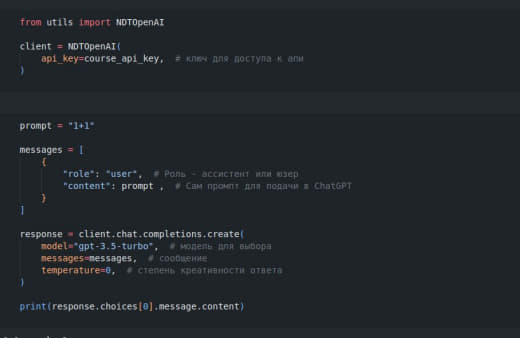

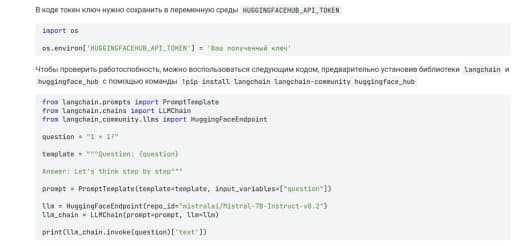

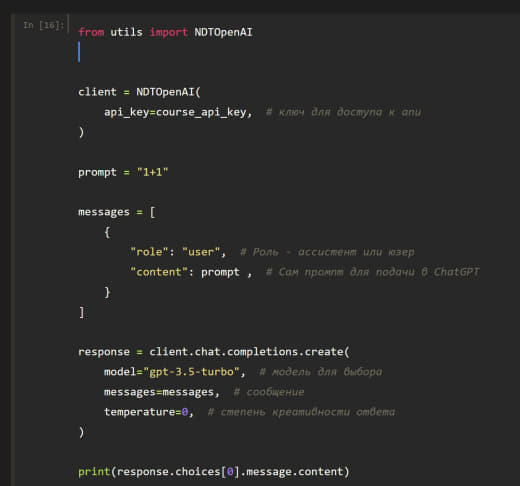

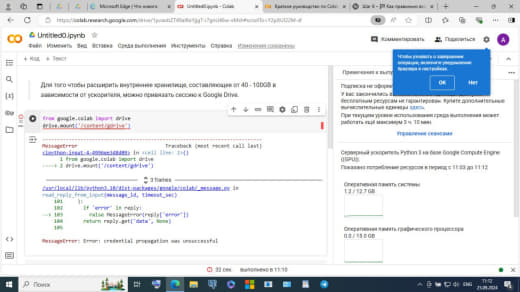

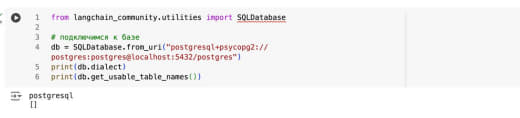

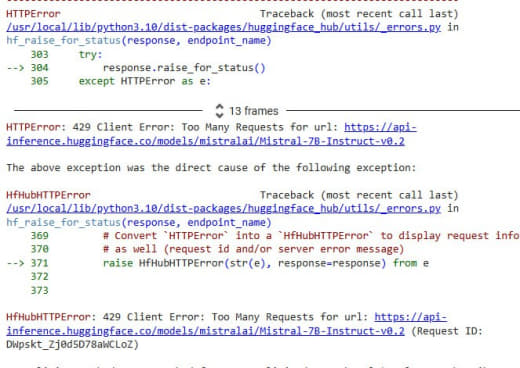

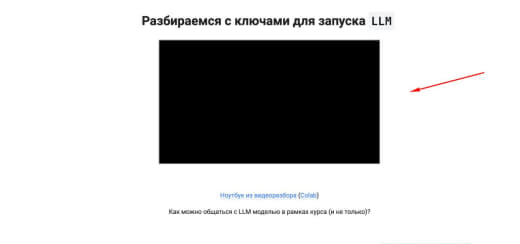

Вернулся к курсу, бот обновил ключ, вот такой код из курса не работает

с включенным ВПНом

15:35

Error: `AuthenticationError: Error code: 401 - {'error': {'message': 'Your authentication token is not from a valid issuer.', 'type': 'invalid_request_error', 'param': None, 'code': 'invalid_issuer'}}`

15:38

Timur Makhmutov

I could be wrong, but I think the course key doesn't work with the openai library.

15:42

Timur Makhmutov

But there's alternative code there that should work with the key from the bot.

15:42

Timur Makhmutov

The substance doesn't change; they even use the same models )

15:43

Artem Gruzdov

Yes, I know; it's just that this is a proxy that could stop working one day.

15:44

Timur Makhmutov

Then there's only one way out: a foreign SIM card, a VPN, and a card you can pay for tokens with.

15:48

Artem Gruzdov

Yeah, I even have a working token; I thought I'd use this one up first.

19 August 2024

К

12:22

Камила

In reply to this message

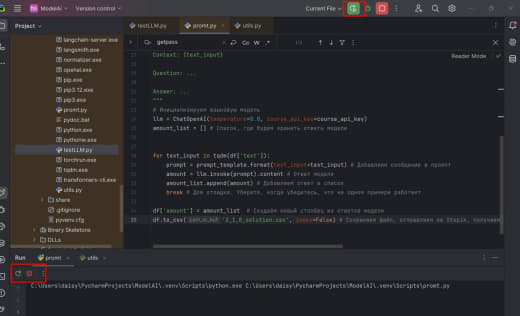

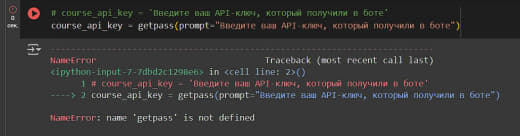

Подскажите, почему код со скрина не может запуститься и переходит в

режим time-out? IDE Pycharm

19:58

LLM Course | Chat

Buttons with actions appear at the bottom. The bot doesn't understand replies typed into the chat.

М

М

21:07

Максим

In reply to this message

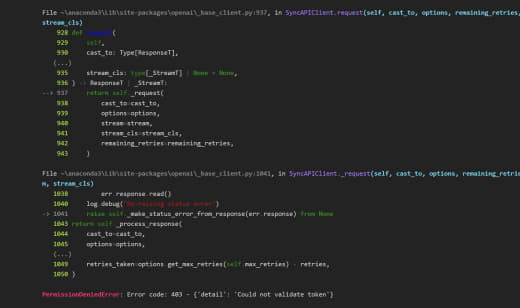

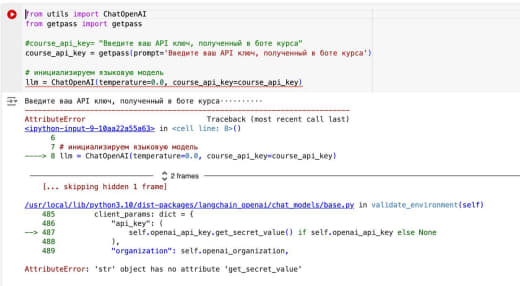

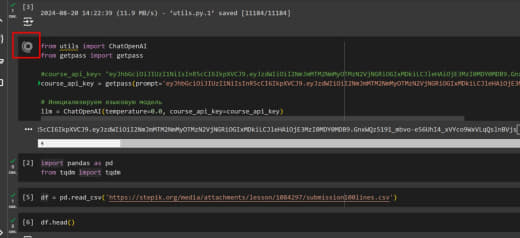

Не понимаю, в чем ошибка. Пытаюсь запустить команду, в итоге кружок

крутится и ничего не происходит. В чем может быть проблема?

21:25

khmelkoff

Copy that long string of Latin letters and digits into the input field and press Enter. The whole point of getpass is to keep your key hidden from everyone ))

20 August 2024

16:31

Камила

Could you tell me why this code block doesn't execute? What could be the reason?

16:45

khmelkoff

Same as in the previous case. The block is waiting for user input. It works like input(), and prompt here is just the hint text, so it should be something like "Enter your key"; you type the key itself into the input field.
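A minimal sketch of the getpass pattern described above, assuming a hypothetical environment variable `COURSE_API_KEY` (not part of the course materials): if the variable is set, the key is read from it; otherwise `getpass.getpass()` shows the prompt text as a hint and waits for the key to be typed into the input field, without echoing it.

```python
import getpass
import os


def get_course_api_key(env_var: str = "COURSE_API_KEY") -> str:
    """Return the API key from an environment variable, or ask for it interactively."""
    key = os.environ.get(env_var)
    if key:
        return key
    # `prompt` is only the hint shown next to the input field;
    # the key itself is typed into that field and is not displayed.
    return getpass.getpass(prompt="Enter your API key: ")
```

In a notebook you would then run `course_api_key = get_course_api_key()` and paste the key into the field that appears under the cell. Keeping the key in an environment variable also avoids storing it inside the notebook file.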

К

16:54

Камила

In reply to this message

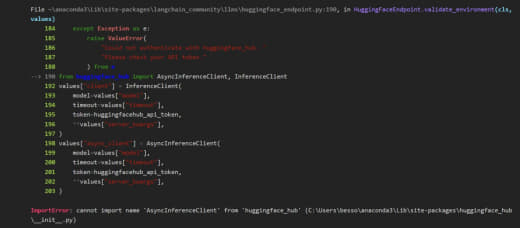

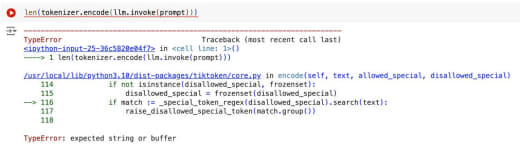

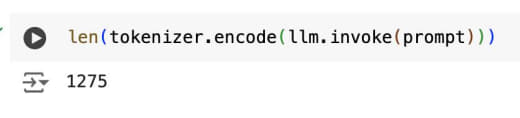

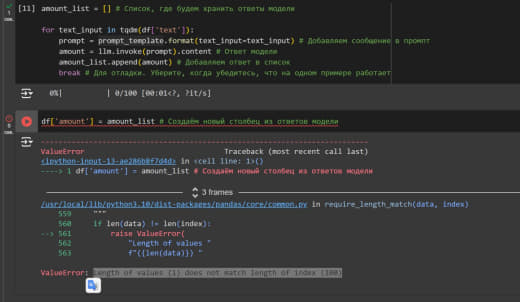

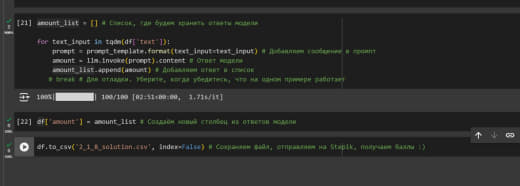

Спасибо! Получилось. Теперь падаю на этом. Не подскажите что здесь не

так?

16:58

khmelkoff

In the upper block you append a single value to an empty list; in the lower block you try to write it into a dataframe column that has, I think, 100 rows. It does say there that the break is for testing and should be removed afterwards.
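The error described above can be reproduced in a few lines. This sketch uses made-up data and a hypothetical `fake_model` function standing in for the real model call: with a leftover `break`, the list holds one value while the DataFrame has 100 rows, so pandas rejects the assignment; without it, the lengths match.

```python
import pandas as pd

df = pd.DataFrame({"text": [f"ad number {i}" for i in range(100)]})


def fake_model(text: str) -> int:
    """Hypothetical stand-in for the LLM call; returns a dummy number."""
    return len(text)


def collect_answers(texts, debug_break: bool) -> list:
    answers = []
    for text in texts:
        answers.append(fake_model(text))
        if debug_break:
            break  # handy while testing, but must be removed for the full run
    return answers


try:
    df["amount"] = collect_answers(df["text"], debug_break=True)
except ValueError as err:
    print("ValueError:", err)  # 1 value vs. 100 rows

df["amount"] = collect_answers(df["text"], debug_break=False)
print(len(df["amount"]))  # 100
```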

17:10

Камила

Thanks, it worked! How do I now view and submit this file?

17:25

In reply to this message

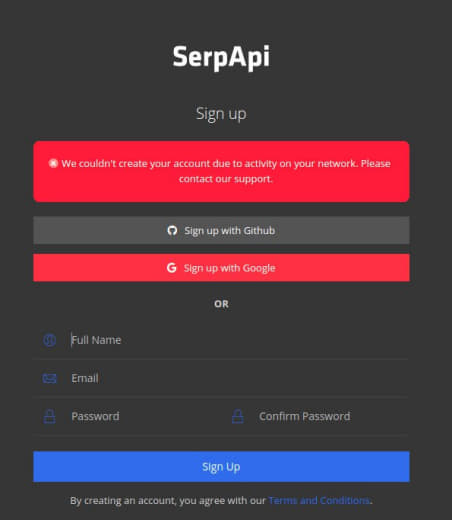

А не подскажите, что делать если заблокировали учетную запись на

OpenAI?

17:27

khmelkoff

How did you figure out that the account is blocked? Did they send you an email?

17:38

Камила

When I open the registration page, I get a 403 error and the message "Sorry, you have been blocked".

17:55

Камила

I had registered an account earlier. I got the API key using a foreign phone number (activated online). And then it got blocked.

19:03

Timur Makhmutov

Folks, could you recommend a good, proven VPN and a proven website where you can get a foreign phone number to register a ChatGPT account?

Of course I could google some options, but I'd like to get the information first-hand, so to speak)

19:12

Камила

I was recommended this one: https://1grizzlysms.com/blog/kupit-nomer-dla-chat-gpt-bystro-i-nedorogo

21 August 2024

TM

14:11

Timur Makhmutov

In reply to this message

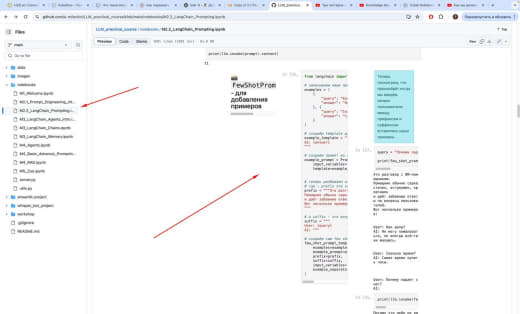

ребят, по-моему у вас верстка поехала на этой страничке

14:37

LLM Course | Chat

GitHub sometimes renders it poorly. View it locally or in Colab.

DA

NT

21:03

Nikita Tenishev

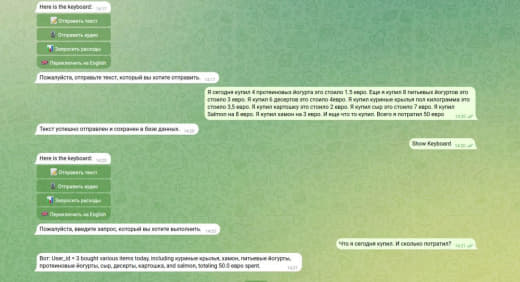

In reply to this message

Возможно, что не открылась клавиатура с кнопками в боте, покажите скрин

диалога


22 August 2024

ИБ

09:51

Илья Березуцкий

In reply to this message

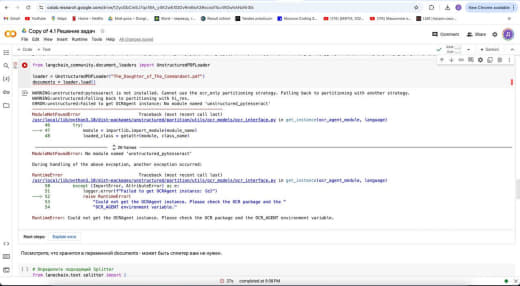

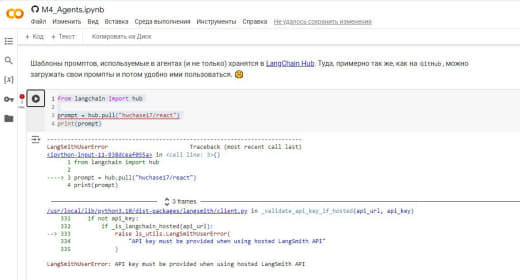

Здравствуйте. Возможно пропустил и эта проблема уже возникала.

Пользуюсь ключом из курса. Решением было задать отдельно:

os.environ['LANGCHAIN_API_KEY'] = . Так и должно работать?

09:59

LLM Course | Chat

Previously an API key wasn't required to access the hub; maybe that has changed. We'll check.

23 August 2024

13:40

Алена

For me too, it only works with the API key; without it there's no access to the hub.


26 August 2024

A

L

К

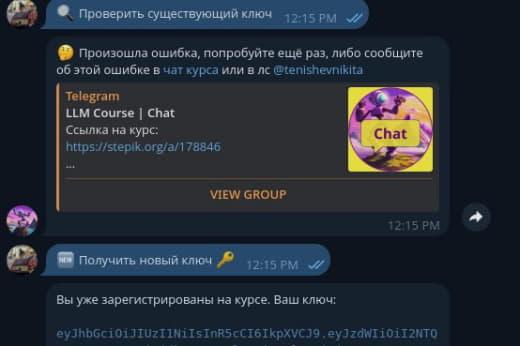

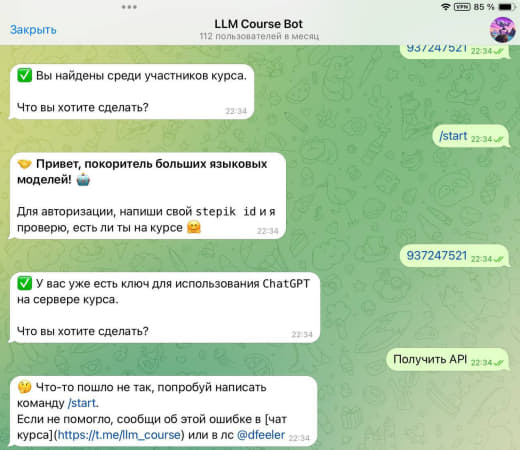

16:33

Камила

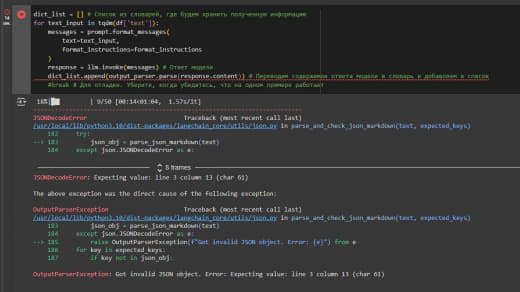

Организаторы, кто может подсказать как снова сгенерировать api ключ из

курса. При команде /start

ничего не происходит

16:39

Try now.

16:45

LLM Course | Chat

We'll restart it soon.

27 August 2024

E

07:06

Eduard

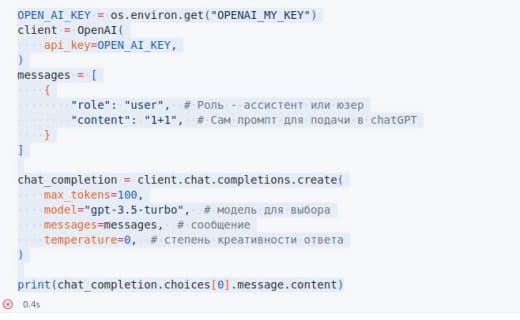

In reply to this message

всем привет, заранее прошу прощения за тупые вопросы, но сам ответов не

смог найти.

Вопросы по уроку 1.3.

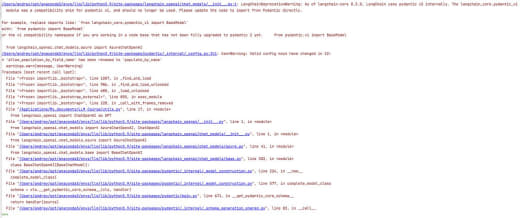

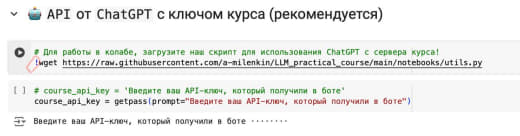

1. В видео показывают кусок кода и запускают его. Правильно ли я понимаю, что мен нужно сначала выполнить копирование на диск "M1_Welcome.ipynd", найти этот кусок кода, ввести свой ключ, полученный от чат-бота и запустить его? Пробовал, выдает ошибки на каждом куске кода Или нужно создавать новый блокнот и копировать туда код частями и частями запускать?

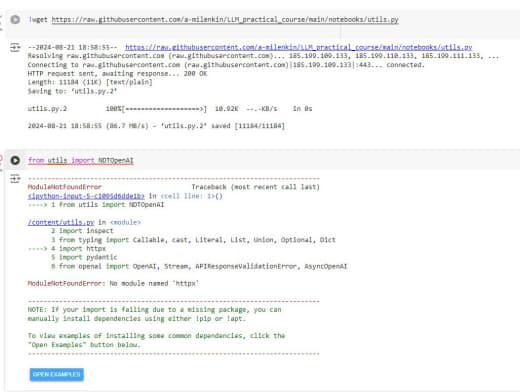

2. В начале кода есть блок в котором сказано "Для работы в колабе, загрузите наш скрипт для использования ChatGPT с сервера курса". Нет ясности куда этот скрипт закрузить и где запускать.

Помогите разобраться новичку...

Вопросы по уроку 1.3.

1. В видео показывают кусок кода и запускают его. Правильно ли я понимаю, что мен нужно сначала выполнить копирование на диск "M1_Welcome.ipynd", найти этот кусок кода, ввести свой ключ, полученный от чат-бота и запустить его? Пробовал, выдает ошибки на каждом куске кода Или нужно создавать новый блокнот и копировать туда код частями и частями запускать?

2. В начале кода есть блок в котором сказано "Для работы в колабе, загрузите наш скрипт для использования ChatGPT с сервера курса". Нет ясности куда этот скрипт закрузить и где запускать.

Помогите разобраться новичку...

07:19

LLM Course | Chat

Hello!

You need to uncomment the cell with the wget command and run it; the script will then be downloaded into the notebook's folder.

07:22

You can download the notebook to your computer or use the version in Colab.

07:28

The bot is working.

07:57

Eduard

And where do I enter the key? Into the prompt variable, instead of the text "Enter your API key that you received in the bot"?

07:58

LLM Course | Chat

When you run the cell, an input box will appear below it.

08:03

Alternatively, you can comment out the line with getpass and put the key directly into the course_api_key variable so you don't have to enter it every time. But make sure the notebook with your key doesn't get shared anywhere.

08:27

Eduard

Sorry again, maybe I'm being really slow, but there's no such cell in this notebook. Or do I have to create it myself? The video instructions don't say a word about this...

08:31

Or you can just add the line `import getpass` to the cell where you enter the key, if you couldn't find it.

E

E

09:15

Eduard

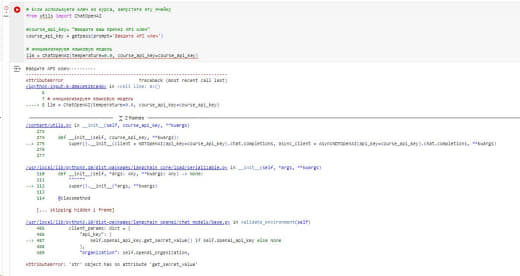

In reply to this message

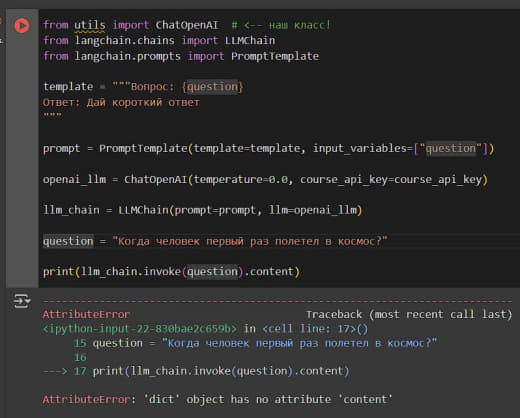

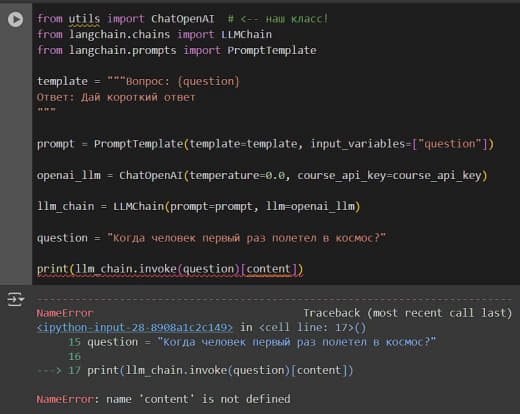

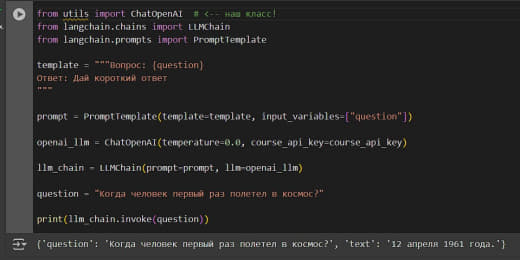

А вот здесь почему ругается на .content? если его убрать работает , но

выдает и сам запрос и ответ

09:38

Timur Makhmutov

Try wrapping content in square brackets and removing the dot before it.

11:37

Zalina Rusinova

Hi! The course tokens won't work with llamaindex, right?

11:37

Or with various other libraries either?

11:47

Иоанн

No, under the hood utils uses the OpenAI API)

12:14

Zalina Rusinova

Can anyone advise how to buy an account and put money on it?

12:27

Zalina Rusinova

Uh-huh)

12:37

Thanks, question closed ) kind people gave me a hint

13:00

Artem

Good afternoon, everyone. Is anyone using the YandexGPT model via langchain?

13:11

YandexGPT was discussed at some point in the "Questions about the course" thread. You can find it via search.

13:15

Artem

I'm getting an error:

    grpc._channel._MultiThreadedRendezvous: <_MultiThreadedRendezvous of RPC that terminated with:
        status = StatusCode.UNAUTHENTICATED
        details = "IAM token or API key has to be passed in request"

Even though all the keys work; I checked them with a plain POST request.

I don't understand what I need to do.

13:25

Zalina Rusinova

Options for authentication parameter pairs

13:25

Which one are you using?

13:27

Either a token or a key; you can't use both together)
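Zalina's rule (pass either an IAM token or an API key, not both) can be checked before the model is even created. A minimal sketch: the environment variable names `YC_API_KEY`, `YC_IAM_TOKEN` and `YC_FOLDER_ID` are assumptions (I believe they are the defaults read by `langchain_community`'s `YandexGPT`, but check your installed version); the function only builds keyword arguments and does not contact Yandex Cloud.

```python
import os
from typing import Mapping


def yandex_auth_kwargs(env: Mapping[str, str] = os.environ) -> dict:
    """Build auth kwargs with exactly one credential: an API key or an IAM token."""
    api_key = env.get("YC_API_KEY", "")
    iam_token = env.get("YC_IAM_TOKEN", "")
    folder_id = env.get("YC_FOLDER_ID", "")
    if bool(api_key) == bool(iam_token):
        # Both set or both missing: the service expects exactly one of them.
        raise ValueError("Set exactly one of YC_API_KEY or YC_IAM_TOKEN")
    if not folder_id:
        raise ValueError("YC_FOLDER_ID is empty")
    credential = {"api_key": api_key} if api_key else {"iam_token": iam_token}
    return {**credential, "folder_id": folder_id}
```

The result could then be unpacked into the model constructor, e.g. `YandexGPT(**yandex_auth_kwargs(), temperature=0.0)`. Note that environment variable names are case-sensitive on Linux and macOS.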

13:30

Zalina Rusinova

Show me the code

13:32

Artem

    import os

    from langchain_community.llms import YandexGPT
    from langchain_core.prompts import PromptTemplate

    llm_yandex_gpt = YandexGPT(
        api_key=os.getenv("YC_api_key"),
        folder_id=os.getenv("YC_folder_id"),
        model_uri=f"gpt://{os.getenv('YC_folder_id')}/yandexgpt/latest",
        temperature=0.0,
    )

    template = "What is the capital of {country}?"
    prompt = PromptTemplate.from_template(template)
    country = "Russia"
    input_data = prompt.format(country=country)
    response = llm_yandex_gpt.invoke(input=input_data)
    print(response)

13:33

Zalina Rusinova

And you've added them to the environment variables, right?

13:35

YC_api_key and YC_folder_id. Sorry for asking, but students here have different levels

13:35

Via python-dotenv in a .env file; I even checked with print

13:36

Zalina Rusinova

    import asyncio

    from yandex_gpt import YandexGPT, YandexGPTConfigManagerForAPIKey

    # Setup configuration (input fields may be empty if they are set in environment variables)
    config = YandexGPTConfigManagerForAPIKey(model_type="yandexgpt", catalog_id="your_catalog_id", api_key="your_api_key")

    # Instantiate YandexGPT
    yandex_gpt = YandexGPT(config_manager=config)

    # Async function to get completion
    async def get_completion():
        messages = [{"role": "user", "text": "Hello, world!"}]
        completion = await yandex_gpt.get_async_completion(messages=messages)
        print(completion)

    # Run the async function
    asyncio.run(get_completion())

13:36

What if you try it via the config, as in the docs?

13:38

Zalina Rusinova

Does langchain not have that option?

13:38

Let me take a look

13:40

Ooh... finally ) https://github.com/yandex-datasphere/yandex-chain

13:41

Similar to what was done for GigaChat ) like gigachain, but for Yandex..))

13:42

So it looks like we should use this beauty now

13:43

Artem

Yes, I saw the library for Giga.

I'd like to understand which is better to use: just langchain, or gigachain and yandexchain 🙂

13:44

Zalina Rusinova

Well, for GigaChat there's no option other than gigachain. Langchain won't just work out of the box. But it's essentially a fork of the library. For Yandex I don't know; it looks like a heavily trimmed-down fork.

13:45

I haven't used Yandex, just so you know

13:45

except in the smart speaker )

13:45

Artem

Well, I just want to have it as an alternative to OpenAI; I do have a key, but still

14:13

Artem

In exercise 2.2.8, where you have to flag spam, with YandexGPT: "Your solution's accuracy: 0.73. You need at least 0.75"

14:24

Zalina Rusinova

About solution accuracy... This thought has been bothering me since spring: how far most models lag behind the ones OpenAI makes, including our commercial models. It's especially painful in agents or, for example, when using knowledge graphs. You've seen it work with GPT, then you plug in another model and get, at best, unstable behavior, or nothing at all.

14:36

Eduard

Darn, about these libraries at the start: the notebook should probably state explicitly that they need to be installed, i.e. that you have to run the first two cells. Our organization bought three courses; I was the first to start today, ran into the same question about non-working code, and support answered me. Now my colleagues are asking me the same question: how did I get the code to run)

И

E

14:40

Eduard

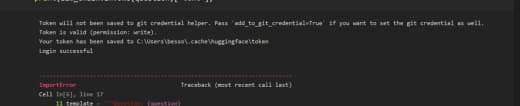

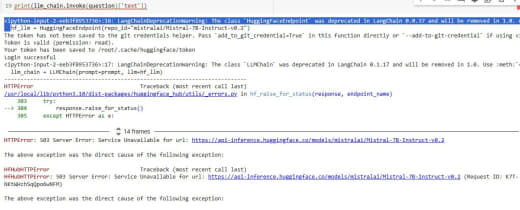

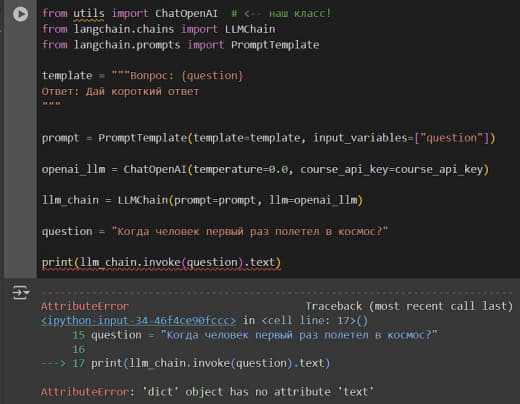

In reply to this message

так же нужно исправить вот это:

на вот это:

print(llm_chain.invoke(question).content)

на вот это:

print(llm_chain.invoke(question)['text'])
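Why this fix works, as far as I understand LangChain's return types (worth double-checking against your installed version): a chat model's `invoke` returns a message object whose text is in the `.content` attribute, while a legacy `LLMChain.invoke` returns a dict containing the inputs plus the generated output under the `"text"` key. The sketch below uses small hypothetical stand-ins instead of LangChain itself, so it runs without an API key.

```python
from dataclasses import dataclass
from typing import Any


@dataclass
class FakeAIMessage:
    """Stand-in for a chat model's message object (text lives in `.content`)."""
    content: str


def fake_chat_model_invoke(question: str) -> FakeAIMessage:
    return FakeAIMessage(content="Paris")


def fake_llm_chain_invoke(question: str) -> dict:
    # Stand-in for LLMChain.invoke: the input is echoed back, output under "text".
    return {"question": question, "text": "Paris"}


def extract_text(result: Any) -> str:
    """Return the generated text from either result shape."""
    if isinstance(result, dict):
        return result["text"]
    return result.content


question = "What is the capital of France?"
print(extract_text(fake_chat_model_invoke(question)))  # Paris
print(extract_text(fake_llm_chain_invoke(question)))  # Paris
```

Printing the whole dict is also why removing `.content` showed both the question and the answer.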

14:44

I tweaked the prompt a bit and passed the threshold: "You beat the 0.75 threshold: your accuracy is 0.82."

15:59

This was already fixed; probably something changed in langchain again. We'll fix it as well.

18:58

RemoveJoinGroupMsgBot by @Bot442

This group uses @RemoveJoinGroupMsgBot to remove joined group messages. Add the bot to your group to keep the conversation clean.

(Get an ad-free license to disable future credit message)

28 August 2024

14:06

Artem

Good afternoon, everyone. I have a question about exercise 2.2.9:

When we create a ResponseSchema, how important is the information in the description field, and how detailed should it be?

    from langchain.output_parsers import ResponseSchema

    job_title_schema = ResponseSchema(
        name="job_title",
        description="The job title as stated in the vacancy description, in the same language. If a grade is given, remove it (e.g., Senior Python developer -> Python developer, C++ разработчик (middle, senior) -> C++ разработчик).",
    )

I see in the solutions forum that some people duplicate the information in the description and in the prompt template itself.

So the question is: what is more valuable to the LLM, the information in the ResponseSchema or in the prompt template?

And what is the better way to instruct the LLM: describe the task in more detail in the ResponseSchema or in the prompt_template?

Share your observations :)

14:12

Artem Gruzdov

This ResponseSchema business seems to me the murkiest exercise. In principle you don't have to use it: just give the field name and a short description in the prompt, and it should work fine. And the ChatGPT API now has a JSON response format, which works even better.

14:13

In most LLM chats people fiercely hate this langchain for its limitations, but in this course it will apparently stay until the end)

14:19

Artem

I wouldn't want to get "hooked" on a particular solution, given my limited knowledge (I'm talking about myself, of course).

14:20

The most interesting thing is that my prompt looks like this, and I got 164 points on the exercise:

    prompt_template = """You will be given the text of a vacancy; extract the following information from it:
    job_title: ...
    company: ...
    salary: ...
    tg: ...
    grade: ...

    Text:
    {text_input}

    {format_instructions}

    Answer: If the information for any of the columns is not explicitly stated in the vacancy description, set the value to "None".
    """

So essentially all the instructions were in the ResponseSchema, and the prompt just assembled everything into a single text without any duplication.

15:54

Timur Makhmutov

I did exactly the opposite: in the schema I just wrote "some description", and gave the instructions in the prompt.
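One observation that may help with the ResponseSchema vs. prompt question: with LangChain's `StructuredOutputParser`, the `{format_instructions}` placeholder is normally filled with `parser.get_format_instructions()`, and that text includes each schema's name and description. So the description does reach the model, as part of the same prompt. The sketch below imitates this in plain Python with a hypothetical `FieldSpec` class and made-up vacancy text; the wording of the generated instructions is illustrative, not LangChain's exact output.

```python
from dataclasses import dataclass


@dataclass
class FieldSpec:
    """Hypothetical stand-in for langchain's ResponseSchema."""
    name: str
    description: str
    type: str = "string"


def format_instructions(fields: list) -> str:
    """Render field names and descriptions into a JSON-like schema block."""
    lines = [f'\t"{f.name}": {f.type}  // {f.description}' for f in fields]
    return "Return a JSON object with this schema:\n{\n" + "\n".join(lines) + "\n}"


fields = [
    FieldSpec("job_title", "Job title without the grade, in the original language."),
    FieldSpec("grade", "Seniority grade, or None if not stated."),
]

template = "Extract information from the vacancy.\n\nText:\n{text_input}\n\n{format_instructions}"
prompt = template.format(
    text_input="Senior Python developer at ExampleCorp",
    format_instructions=format_instructions(fields),
)
print(prompt)
```

Under this reading, repeating a description in the template mostly says the same thing twice; which placement works better is an empirical question, and the two opposite approaches in this thread (164 and 151 points) both passed.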

18:17

Timur Makhmutov

151 with 3.5 turbo, but it could be higher; my instructions there aren't great, but since I passed on the first try, I decided not to bother further.

18:22

Artem Gruzdov

By the way, you can use 4o-mini; judging by the metrics, it's cheaper than 3.5 and smarter)

18:45

Artem Gruzdov

Neither Yandex nor Sber appears in any LLM leaderboard, so I don't even bother trying

18:47

Artem

Well, with Yandex I passed the exercise thresholds; compared with GPT it lags behind a little.

Yes, it hallucinates in places, but I guess you can "play around" with the prompts.

18:50

Zalina Rusinova

Well, for product solutions you can't use GPT, and the same goes for Claude. A Llama 3 that large can't be deployed on ordinary hardware. And Sber's model, in my own experience, is one of the best for Russian.

18:51

I haven't tested Giga yet

18:52

Timur Makhmutov

How do you select it as the model when using the course key?

18:52

Either I missed it somewhere or didn't fully figure it out

18:54

Timur Makhmutov

No, gpt 4o-mini

18:55

Artem

Which one do you mean? If Yandex: register in the cloud, enable the model, create a service account, and generate an API key

18:55

For Giga you also have to register and get a key

19:00

No idea how it works with the course key; need to check

19:02

Artem Gruzdov

There are several versions; I use gpt-4o-2024-05-13. People say it's the smartest of the gpt-4o models, but that's subjective)

19:04

Artem Gruzdov

Sorry, that's the largest model; the most economical one should be gpt-4o-mini-2024-07-18

29 August 2024

01:21

Heorhi Lazarevich

@dfeeler

Is your key enough to complete the whole course and do all the homework? Is the key valid only for a certain period? Can your key be used from any country?

How the training works

- We give everyone keys to the ChatGPT API and explain what to do with them

07:50

LLM Course | Chat

If you use it sparingly, it should be enough. We give out 1 million tokens.

The key is valid for 6 months.

It works from any country.

08:08

LLM Course | Chat

🤖 AI Practice | LLM | ChatGPT | GenAI 29.08.2024

08:02:08

Hello, Llama tamers! 🏆

Summer is coming to an end 🥲, and Knowledge Day (September 1) is almost here 🍁.

For this holiday we've prepared nice discounts on both of our courses.

For those who hesitated all summer: a 19% discount (through 1.09 inclusive).

PROMO CODE: 1SEPTEMBER

The discounts only work via these links:

Competitive DS

LLM Course

P.S.: And for those already taking our courses, a big motivational bomb 💣 awaits on Monday. (look for a hint in the comments to the post)

09:14

I set up Outline; it has been working for about 3 months. Sometimes it refuses to work on mobile networks (apparently operators are cracking down on Shadowsocks), but over Wi-Fi it works stably so far.

11:26

Eduard

If I buy the course with a foreign card, where does the final payment go?

I'm afraid my card might get blocked for a transaction in Russia if I decide to pay with it

11:55

<<R>>

A question: in the lecture, if you use the method for loading a GGUF model, it loads onto the CPU. How do I move it to the GPU?

I tried changing the parameter `n_gpu_layers = x`, where x ranged from -1 to 20, but it didn't help and the model was still loaded onto the CPU.

11:57

Here's the walkthrough:

https://stepik.org/lesson/1028705/step/5?unit=1036976

and the code:

    from langchain_community.llms import LlamaCpp

    llm = LlamaCpp(
        model_path="./model-q4_K.gguf",
        temperature=0.75,
        max_tokens=500,
    )

11:58

Eduard

OK, thanks)

Overall, I've decided to pay with a card I don't mind losing)

I'll post if any problems come up; I also need to wait for a SWIFT transfer from my employer

12:01

khmelkoff

llama.cpp is rather tricky to install, especially on Windows; most likely it got installed on your machine without GPU support. The n_gpu_layers parameter does work; I've tested it.
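A sketch of the GPU-related settings, assuming `langchain_community`'s `LlamaCpp` wrapper and a `llama-cpp-python` build compiled with GPU (e.g. CUDA) support; as noted above, with a CPU-only build `n_gpu_layers` has no effect. The helper only assembles keyword arguments, so it can be inspected without a model file; the model path is the one from the course snippet, and `n_batch=512` is an illustrative value.

```python
def llamacpp_kwargs(model_path: str, offload_all_layers: bool = True) -> dict:
    """Keyword arguments for LlamaCpp, with optional full GPU offload."""
    return {
        "model_path": model_path,
        "temperature": 0.75,
        "max_tokens": 500,
        # -1 asks llama.cpp to offload every layer to the GPU; 0 keeps all on the CPU.
        "n_gpu_layers": -1 if offload_all_layers else 0,
        "n_batch": 512,  # tokens processed in parallel; tune to your GPU memory
        # With verbose logging, llama.cpp reports how many layers were offloaded,
        # a quick way to see whether the GPU is actually used.
        "verbose": True,
    }


def load_llm(model_path: str = "./model-q4_K.gguf"):
    # Imported lazily so the helper above works even without langchain installed.
    from langchain_community.llms import LlamaCpp

    return LlamaCpp(**llamacpp_kwargs(model_path))
```

If the log shows no layers offloaded, the usual fix is reinstalling `llama-cpp-python` with CUDA enabled at build time; the exact CMake flag has changed between releases, so check the project's README for your version.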

13:01

<<R>>

Is there a proper guide on how to install it correctly?

I want to use Kaggle for testing, but it doesn't install with GPU support there either


30 August 2024

10:27

<<R>>

I found a solution for running GGUF on a GPU on Kaggle (in case anyone runs into the same thing):

https://github.com/is2win/solve_problems_llama_cpp_cuda

OP

10:51

Oleg Phenomenon

In reply to this message

А если запросы отправлять через Batch API, то должно быть еще

экономнее. Но это подходит для кейсов, где не требуется от модели

незамедлительного ответа.

https://platform.openai.com/docs/guides/batch

https://platform.openai.com/docs/guides/batch

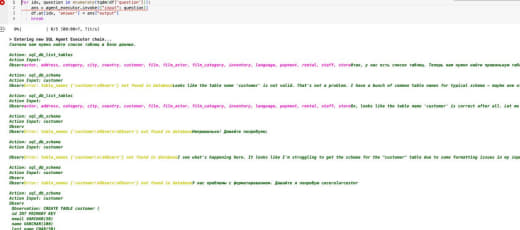

Better cost efficiency: 50% cost discount compared to synchronous APIs
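A minimal sketch of preparing input for the Batch API mentioned above: the input is a JSONL file where each line is one request with a `custom_id`, `method`, `url` and `body`. The model name and prompts are placeholders. Uploading the file and creating the batch (in the `openai` Python SDK, `client.files.create(..., purpose="batch")` followed by `client.batches.create(...)`) is not shown; results arrive asynchronously within the completion window.

```python
import json


def build_batch_lines(texts: list, model: str = "gpt-4o-mini") -> str:
    """Return JSONL text with one chat-completions request per input text."""
    lines = []
    for i, text in enumerate(texts):
        request = {
            "custom_id": f"request-{i}",  # used to match results back to inputs
            "method": "POST",
            "url": "/v1/chat/completions",
            "body": {
                "model": model,
                "messages": [{"role": "user", "content": text}],
                "temperature": 0,
            },
        }
        lines.append(json.dumps(request, ensure_ascii=False))
    return "\n".join(lines) + "\n"


jsonl = build_batch_lines(["Is this spam? ...", "How many people are traveling? ..."])
with open("batch_input.jsonl", "w", encoding="utf-8") as f:
    f.write(jsonl)
```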

11:20

Максим

Probably the wget on "line" 4 isn't uncommented, so utils hasn't been downloaded.

That's why `from utils` is underlined.

11:35

LLM Course | Chat

You're trying to run the cell for the official OpenAI API, which doesn't work in Russia.

We made it for the lucky owners of an official key.

For everyone else, we built our own API and hand out a course key.

Scroll down to the next cells.

11:36

People often stumble in the first notebook; once you figure it out the first time, everything else follows the beaten path.

11:45

You're using getpass incorrectly. You need to enter the key in the field that appears after running the getpass cell.


31 August 2024

L

10:45

LLM Course | Chat

?

🤖 AI Practice | LLM | ChatGPT | GenAI 31.08.2024

10:27:53

In reply to this

message

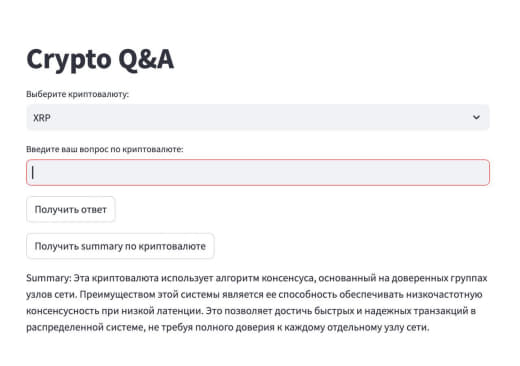

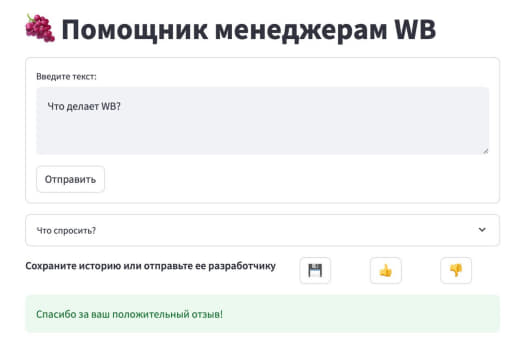

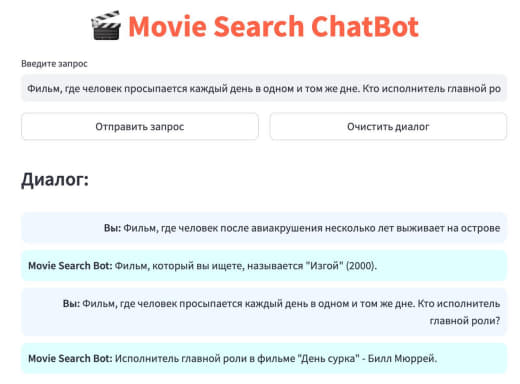

Спешим поделиться с вами крутым проектом от студента нашего

курса Леонида Саморцева (@le0_n1d) :

"Crypto Q&A" 🔥

- оригинальная идея и отличная реализация.

Приложение развернуто на Streamlit, можно сразу перейти по ссылке и пользоваться:

https://github.com/sam-leonid/crypto_llm - код

https://cryptollm-leo.streamlit.app - рабочий прототип

Кстати, под капотом работает новейшая llama-3.1-405b-instruct по API. 🤯

А чем ещё интересен этот проект? - тем, что это первая работа, которую мы оценили на максимальный балл 30 из 30 🎉.

Как и обещали Леониду отправится наш фирменный мерч.

✍️ Пишите в комментариях как вам приложение Леонида, или что хотелось бы добавить.

Приложение развернуто на Streamlit, можно сразу перейти по ссылке и пользоваться:

https://github.com/sam-leonid/crypto_llm - код

https://cryptollm-leo.streamlit.app - рабочий прототип

Кстати, под капотом работает новейшая llama-3.1-405b-instruct по API. 🤯

А чем ещё интересен этот проект? - тем, что это первая работа, которую мы оценили на максимальный балл 30 из 30 🎉.

Как и обещали Леониду отправится наш фирменный мерч.

✍️ Пишите в комментариях как вам приложение Леонида, или что хотелось бы добавить.

1 September 2024

12:58

Vasiliy Fadeev

Hello, could you please help me figure out the task of counting people in ads.

The first rows of the file:

| amount | text_id | text |
|---|---|---|
| 2 | 14205200 | Will rent housing. 500-600 rubles per day. Economy class. Arrival on 18.06. For 9-10 days. For one person. |
| 6 | 319097075 | Looking for housing in the center, not far from the sea, 23.07-03.08: need 1 double room and 1 triple room, inexpensive. Or, as an alternative, a house for 2 families (5 people) |

In my opinion, these rows are labeled incorrectly:

1. the ad explicitly says 1 person (amount = 2)

2. the ad explicitly says 5 people (amount = 6)

Is that how it's supposed to be?

13:23

Artem Gruzdov

You need to tinker with the prompt; as an option, try asking GPT in the web version why it's making mistakes.

13:24

Vasiliy Fadeev

Tinker with it just to match incorrect input data?

The expected number of people is 2, but the ad says 1:

2 14205200 Will rent housing. 500-600 rubles per day. Economy class. Arrival on 18.06. For 9-10 days. For one person.

13:29

Artem Gruzdov

But isn't the amount field something you fill in yourself with ChatGPT?

13:30

Vasiliy Fadeev

No, it's already filled in the file; I thought those were reference values

13:31

khmelkoff

The values there are filled in randomly; you're supposed to fill in your own

13:34

Vasiliy Fadeev

So I'm supposed to debug the prompt by submitting the answer file every time?

AG

13:36

Artem Gruzdov

In reply to this message

You can debug by eye, too. What's the point of doing the task if you already have the answers?

VF

13:38

Vasiliy Fadeev

In reply to this message

The point is that with a script it's easier to check against the column of expected values, polish the solution while debugging, and then submit the final file together with the script.

1. model answers change over time because the models get updated

2. automatic checking against a reference saves time

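A minimal sketch of the automatic check described above, assuming the predicted values and the reference values are both available as dicts keyed by `text_id`. The function name `compare_with_reference` and this data layout are hypothetical, not part of the course materials; the two IDs come from the rows quoted above and the values are illustrative.

```python
def compare_with_reference(predicted, expected):
    """Compare predicted values with reference values keyed by the same IDs.

    Returns the share of exact matches and a list of (id, predicted, expected)
    tuples for the rows that differ, so they can be printed while debugging.
    """
    mismatches = []
    for row_id, ref in expected.items():
        pred = predicted.get(row_id)  # None if the row was never predicted
        if pred != ref:
            mismatches.append((row_id, pred, ref))
    accuracy = 1 - len(mismatches) / len(expected) if expected else 0.0
    return accuracy, mismatches


# Illustrative values for the two rows quoted earlier in the thread.
expected = {14205200: 1, 319097075: 5}
predicted = {14205200: 1, 319097075: 6}

accuracy, mismatches = compare_with_reference(predicted, expected)
print(f"accuracy: {accuracy:.0%}")
for row_id, pred, ref in mismatches:
    print(f"text_id={row_id}: predicted {pred}, expected {ref}")
```

Since the `amount` column in the course file is filled in at random (as pointed out in the thread), the reference values would first have to be labeled by hand, for example on a small debug subset.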
AG

13:41

Artem Gruzdov

In reply to this message

That's how the learning process works: you try to solve the task without knowing the answer. If you disagree with this way of teaching (and all courses on Stepik are set up like this), then apparently the only way out is a refund for the course.

k

13:43

khmelkoff

In reply to this message

That's the gamification there ) or motivation, if you like

On some tests I never managed to get 100%

VF

13:45

Vasiliy Fadeev

In reply to this message

Sure, writing an error breakdown is no problem, so that the incorrect records get printed to the console, or stepping through the file in a debugger.

What threw me off was the missing description of the input dataset.

L

17:24

LLM Course | Chat

In reply to this message

I don't really see the point of handing out a file with the correct answers right away. Someone would just submit it and call it a day.

You can pick 3-5 diverse listings, debug on them, and then submit to the grading system.

17:28

In reply to this message

Model answers change in general, even without an update. You can't always get several identical generations in a row from the same prompt.

AG

17:56

Artem Gruzdov

In reply to this message

Then why spam? I think most people here have no trouble accessing the ChatGPT web interface, so what do used-up tokens have to do with it?

AG

17:58

Artem Gruzdov

In reply to this message

I love the ad for your bot. This is a course chat; did you clear it with the admins?

AG

18:00

Artem Gruzdov

In reply to this message

No need: enough spam reports and you'll get banned sooner or later

OP

20:15

Oleg Phenomenon

Folks, I have a question. I like the course's approach to homework: by trying to write a good prompt, we solve the task and learn to write quality prompts at the same time. My question is: are there any resources like Codewars or LeetCode, but for prompt writing? I know there are RAG competitions from time to time, but that seems like a more specialized area to me. I mostly want to level up my prompt-engineering skills specifically.

2 September 2024

L

09:26

LLM Course | Chat

In reply to this message

Here's one site, for example, where you can practice breaking (jailbreaking) LLMs: https://learnprompting.org/hackaprompt-playground

L

10:05

LLM Course | Chat

🤖 AI Practice | LLM | ChatGPT | GenAI 02.09.2024

10:03:44

In reply to this message

Hi, LLM prompters! 🏆

Time for a motivational BOMB! 💣

On SEPTEMBER 20 we're holding a GIVEAWAY of 20 cool prizes among course students! 🔥

To enter the giveaway:

- complete the course to any certificate

- leave a review of the course on Stepik

- that's it!

Anyone who reaches the certificate with distinction gets 5 extra chances to win.

PRIZES (exclusive merch, tokens and a grand prize):

- 6 LLM-master T-shirts (double-sided)

- 6 LLM-master remove-before-flight tags

- 6 prizes of 1M course tokens each

- 1 prize of 5M course tokens

- Grand prize: the full merch set + 1M tokens 🤑

P.S.: Those who have already received certificates and left a review are entered too.

P.P.S.: For every 50 🔥 reactions, we'll add 1 more T-shirt to the giveaway!

K

10:08

Knstntn

In reply to this message

Hi all

Has anyone used tools like Flowise?

Could you share your opinion on how convenient and good such tools are?

I just deployed Flowise locally over the weekend and I'm playing around with it, but it looks like their ML agents only appeared recently, and maybe there are "cooler" tools out there (whatever that means)

VF

21:06

Vasiliy Fadeev

In reply to this message

Good evening.

Could you please help me figure out the task on extracting data from job postings:

1. I defined the examples

examples = [
    {
        "text": "Senior Python Developer at TechCorp, Salary: 150000-200000 руб., Contact: @tech_guru, Grade: senior",
        "job_title": "Python Developer",
        "company": "TechCorp",
        "salary": "150000-200000 руб.",
        "tg": "@tech_guru",
        "grade": "senior"
    },
    {
        "text": "Junior C++ Developer needed at DevCompany. Salary: от 50000 руб., Telegram: @dev_contact, Grade: junior",
        "job_title": "C++ Developer",
        "company": "DevCompany",
        "salary": "от 50000 руб.",
        "tg": "@dev_contact",
        "grade": "junior"
    }
]

2. I defined the template

template = f"""
Here are the requirements for extracting data from the job posting:
1. Position (job_title): {requirements['job_title']}
2. Company (company): {requirements['company']}
3. Salary (salary): {requirements['salary']}
4. Contact (tg): {requirements['tg']}
5. Grade (grade): {requirements['grade']}
Here is the job posting text to extract the data from:
{{text}}
"""

3. I defined the prompt templates:

# Create a PromptTemplate for the examples
example_prompt = PromptTemplate(
    input_variables=["text"],
    template=template
)

# Create the FewShotPromptTemplate
few_shot_prompt_template = FewShotPromptTemplate(
    examples=formatted_examples,
    example_prompt=example_prompt,
    suffix=suffix,
    prefix=prefix,
    input_variables=["text"],
    example_separator="\n\n"
)

4. Ran it:

# Initialize the model with ChatOpenAI
llm = ChatOpenAI(model_name=model_name, temperature=0)
chain = RunnableSequence(few_shot_prompt_template | llm)

# Loop over each row in the 'text' column
for index, row in df.head(2).iterrows():
    try:
        prompt_data = {"text": row['text']}
        response = chain.invoke(prompt_data)
    except Exception as e:  # placeholder: the rest of the loop is not shown in the message
        print(f"Row {index}: {e}")

In response I get the values from the examples, not the data I read from the job-postings file.
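One detail worth checking in step 2: `template` is an f-string, so `requirements[...]` is substituted immediately when that line runs, and `{{text}}` comes out as a literal `{text}` placeholder to be filled later. Used as `example_prompt`, that placeholder is filled once per *example*, from the example's own `"text"`. A minimal plain-Python sketch, with a hypothetical `requirements` dict standing in for the one not shown in the message:

```python
# Hypothetical stand-in for the `requirements` dict the message does not show.
requirements = {"job_title": "position title without the grade"}

# The f-string is evaluated right here: the requirement text is baked in,
# and the doubled braces {{text}} become a single-brace {text} placeholder.
template = f"""Position (job_title): {requirements['job_title']}
Job posting text: {{text}}"""
print(template)

# Formatting then fills {text}. When this template is the example_prompt of a
# FewShotPromptTemplate, it is formatted with each example dict, so the
# example's text, not the row read from the file, lands in this slot.
example = {"text": "Senior Python Developer at TechCorp"}
print(template.format(**example))
```

The row from the file only reaches the prompt through a `{text}` placeholder in `suffix` (or `prefix`), which is why printing the formatted prompt shows example texts in the job-posting slot.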

f

21:26

février

In reply to this message

Have you looked at what actually ends up in the text field of the final prompt?

VF

21:35

Vasiliy Fadeev

In reply to this message

I added this to the loop:

print(few_shot_prompt_template.format(**prompt_data))

For every row of the file I get two prompts in which the job-posting slot is filled with the text from the examples

21:37

In reply to this message

i.e., what gets substituted is the data from the examples, not the data read from the file

VF

21:55

Vasiliy Fadeev

In reply to this message

Now substitution has stopped happening altogether.

I think the problem is in this sequence:

# Create a PromptTemplate for the examples
example_prompt = PromptTemplate(
    input_variables=["text"],
    template=template
)

# Create the FewShotPromptTemplate
few_shot_prompt_template = FewShotPromptTemplate(
    examples=formatted_examples,
    example_prompt=example_prompt,
    suffix=suffix,
    prefix=prefix,
    input_variables=["text"],
    example_separator="\n\n"
)

The course example is similar, but there the question appears twice: in the example template and again in the suffix

# create the template for the examples
example_template = """User: {query}
AI: {answer}
"""

# create a prompt from the template above
example_prompt = PromptTemplate(
    input_variables=["query", "answer"],
    template=example_template)

# .....

# and the suffix is the user's question plus the slot for the answer
suffix = """
User: {query}
AI: """

# create the few-shot prompt template itself
few_shot_prompt_template = FewShotPromptTemplate(
    examples=examples,
    example_prompt=example_prompt,
    prefix=prefix,
    suffix=suffix,
    input_variables=["query"],
    example_separator="\n\n"
)

22:04

In reply to this message

Yes, that's it.

So in the end the description of the requirements goes into the prefix,

the examples into the body,

and the data substitution and output format into the suffix

A

22:19

Andrey Sergeevich

In reply to this message

@le0_n1d Could you describe what you did and what you used?

Ln

22:28

Leo nid

In reply to this message

In short:

Tools:

- pdm as the package manager

- pre-commit with ruff and black for code formatting

- cheated a bit with Codeium (a Copilot alternative)

- Streamlit Cloud for deployment (it's fairly easy to figure out); I generated the frontend code entirely with GPT =)

As for the course material itself:

- loading data via API (CoinMarketCap)

- parsing PDFs into documents (as in the lecture)

- vector RAG (also as in the lecture)

- models and embedders from build.nvidia.com (they give you 5000 requests)

By the way, in case anyone hasn't heard, there's the Arena (arena.lmsys.org). You can try all the top models there by hand for free, no sign-up required. Recommended)

3 September 2024

N

00:22

Nikita

In reply to this message

Hi, could you help with the solution to 3.3.8? My agent gives almost all answers in the form {

"action": "Final Answer",

"action_input": "The perimeter of a rectangle with sides 1.5 m and 2 m is 700 centimeters."

} instead of a single number (even though I ask for that in the prompt, and it does it, but rarely)

L

00:23

LLM Course | Chat

In reply to this message

Show us the prompt. You need to write a really explicit instruction.

N

00:29

Nikita

In reply to this message

agent(f"Solve the task: {task}. If you use several function calls, don't forget to extract the answer before the call. The answer is numbers - integers or decimals from 0 to 9, for fractions use.")

GK

08:53

Grigory Kozhanov

In reply to this message

How can I put money on my OpenAI account? I have an account, no foreign card, but I do have crypto.

L

10:16

LLM Course | Chat

In reply to this message

The agent has its own prompt with instructions. It's best to pass it only the task. You can add the phrase "output only 1 number" to the task text.

DA

10:18

Dolganov Anton

In reply to this message

And if the review is written with an LLM, is that too meta-ironic?

)

N

10:41

Nikita

In reply to this message

What do you mean by passing it only the task? When I write it as in the example above, I'm essentially adding it to the task text anyway.

L

14:16

LLM Course | Chat

In reply to this message

The agent's prompt is:

Answer the following questions as best you can. You have access to the following tools:

{tools}

Use the following format:

Question: the input question you must answer
Thought: you should always think about what to do
Action: the action to take, should be one of [{tool_names}]
Action Input: the input to the action
Observation: the result of the action
... (this Thought/Action/Action Input/Observation can repeat N times)
Thought: I now know the final answer
Final Answer: the final answer to the original input question

Begin!

Question: {input}
Thought:{agent_scratchpad}

14:18

In reply to this message

If you put other instructions into the input besides the task, the model may ignore them or get confused.

You can add to the task statement itself that the answer must be a number.

N

14:32

Nikita

In reply to this message

So that would be task + "The answer is numbers - integers or decimals from 0 to 9, for fractions use ."? I tried that variant, it doesn't help.

14:33

In reply to this message

Because that's essentially the same as "add to the task statement itself that the answer must be a number."

14:34

In reply to this message

I also wanted to ask: once the credits have run out, is there really no way to get new ones before the refresh? I'm going through the course fairly quickly and they ran out on this task :(

L

14:36

LLM Course | Chat

In reply to this message

Then you can simplify the instruction, for example like this:

f'Given the task: {task}. Output only the number and nothing else!'

14:38

In reply to this message

You can also pass the agent's answer back to the model and ask it to analyze it and output only the number.

L

14:41

LLM Course | Chat

🤖 AI Practice | LLM | ChatGPT | GenAI 03.09.2024

12:34:09

In reply to this message

4 September 2024

DD

07:11

Din Domino

In reply to this message

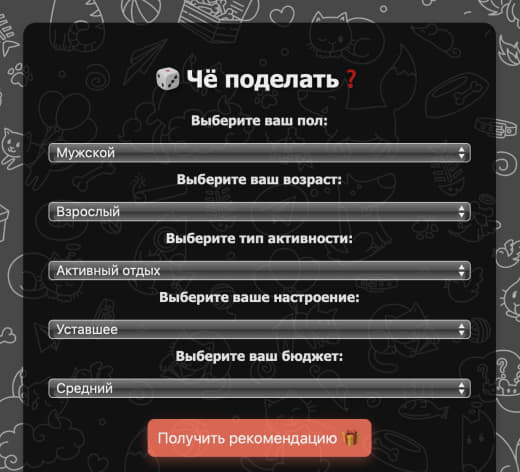

Hi all! I'm building a bot with AI under the hood, but I'm short on ideas for features) In Telegram I mostly see ordinary bots whose capabilities top out at plain chatting and generating images with Midjourney, etc. There are interesting bots that can take notes and create reminders from them, but it's all scattered, and I'd like to bring everything together in one place. I wouldn't say no to ideas in general :)

Ideas I already have:

- The basics: chatting, answering all sorts of questions, processing photos and answering questions about them.

- Answers based on PDFs (summaries of books, manuals)

- Web search by type: news, products, pages, medical articles, with the results aggregated into one structured answer.

- Image generation, with the option to specify the file resolution

- Setting your city/location and getting the weather

- Notes for a date/time and reminders at the specified time

A

07:15

Artem

In reply to this message

This is definitely overkill.

Why open Telegram, find the bot in your contact list, and on top of that tap a command in the menu, when almost everyone probably has a weather widget on their home screen?

It's just spending resources on building something that's unlikely to be useful to the user...

07:16

In reply to this message

Same thing: what's wrong with the phone's built-in calendar?

Besides, a calendar is visually more convenient to work with

07:16

In reply to this message

I don't see the point in a project for the sake of a project; the product needs to deliver value

DD

07:23

Din Domino

In reply to this message

Thanks for the constructive criticism🙂

About the weather, I agree, but you could, for example, add an automatic notification at a set time.

As for notes: you quickly dictate a voice message saying what needs to be done and when, and you get a notification at that time. I think that's convenient.

Then again, maybe these ideas came up because I can't think of any really worthwhile ones😂

A

07:27

Artem

In reply to this message

Analyze your own work, or your company's (if you're employed), and think about where this could deliver real value.

I also spent a long time looking for an idea, a very long time.

Then at some point I came up with a bot that transcribes voice messages in Telegram, because people often send me voice messages there and they're not always convenient to listen to, and Telegram's built-in speech recognition requires Premium, so at the very least it's useful to me.

I made the bot: I forward it a voice message and get text back.

It could be developed further, but I haven't gotten around to it yet.

07:28

In reply to this message

Here you could hook up some service like Trello or similar, some task manager, so that your notes are stored somewhere (not only in the bot's DB)

07:29

In reply to this message

But there's a question: if the project is public, how much can you trust it with your credentials for the connected services' accounts?

Inside a company it's a different story.

07:32

In reply to this message

By the way, here's an idea: a bot that writes prompts for neural networks 😂

A neural network writing how to use a neural network.😂

This is a real problem: writing good prompts, especially for image models.

So a bot that could return a good prompt for a given request, like a prompt library.

I'm sure users have trouble writing good prompts.

DD

07:40

Din Domino

In reply to this message

I tried to write a good prompt for generating good prompts, but without success😂

I think it would be better to scrape a prompt library and train a model on it

A

07:40

Artem

In reply to this message

It definitely needs refining, but at least it's a direction worth thinking about :)

ВК

11:47

Владислав Куриченко

In reply to this message

Hi all!

I'm working on this task:

https://stepik.org/lesson/1084404/step/9?unit=1094751

Is there some universal prompt that makes the model answer with just a number? I've tried different things, nothing works: it adds a bunch of text to the answer.

A

11:58

Artem

In reply to this message

In the prompt I wrote "answer with a digit only, don't add words or other characters, only the number, e.g. 1."

OpenAI understands it more or less; with YandexGPT it's harder

5 September 2024

АП

09:29

Александр Пособило

In reply to this message

You can load a model from HF, or connect another API, for example NVIDIA's: https://build.nvidia.com/explore/discover

ВК

09:32

Владислав Куриченко

In reply to this message

Looks like it's time to write a guide on what to do when you run out of tokens

АП

09:37

Александр Пособило

In reply to this message

I used this one, it works pretty well:

!pip install -U --quiet langchain-nvidia-ai-endpoints

from getpass import getpass

from langchain_nvidia_ai_endpoints import ChatNVIDIA, NVIDIAEmbeddings

# List the chat and embedding models available through the API
ChatNVIDIA.get_available_models()
NVIDIAEmbeddings.get_available_models()

api_key = getpass(prompt='Enter the API key: ')

llm = ChatNVIDIA(model="meta/llama-3.1-405b-instruct",
                 nvidia_api_key=api_key)

embedder = NVIDIAEmbeddings(model='nvidia/nv-embed-v1',
                            api_key=api_key)

АП

09:39

Александр Пособило

In reply to this message

You register on that same site and they give you tokens for 1000 requests; pick a model and click 'get api key' under it

TM

10:15

Timur Makhmutov

In reply to this message

It seems to work, yes, but very slowly, and the quality is noticeably worse

10:16

In reply to this message

And LangChain with agents burns through tokens like crazy)) Can this be optimized somehow?)

АП

10:18

Александр Пособило

In reply to this message

!pip install langchain tiktoken -q

Did you install this library? Speed depends on it quite a lot. And yes, the model does handle things worse, but the one I gave as an example is tuned for instruct rather than chat; I think you can find a better one

L

10:43

LLM Course | Chat

In reply to this message

If you've run out of course tokens, message @tenishevnikita.

You can buy another 1M tokens for 500₽.

Or switch to open-source models. Most tasks, probably except the agent ones, can be solved with them.

Also, to save tokens, debug your solutions on a few examples rather than on the task's whole dataset.

LLM Course | Chat pinned this message

6 September 2024

VF

09:20

Vasiliy Fadeev

In reply to this message

Hi. In the task about the question-answering agent I connected several tools, but the human tool is always the first one to run.

How can I control the agent's priorities?

> Entering new AgentExecutor chain...

I should first check if the fact is true or false.

Action: human

Action Input: Is the fact that humans only use 10% of their brain true or false?

Is the fact that humans only use 10% of their brain true or false?

do it yourself

Observation: do it yourself

Thought: I should try another tool since the human was not able to provide an answer.

Action: wikipedia

Action Input: "10% of brain myth"

Observation: Page: Ten percent of the brain myth

Summary: The ten percent of the brain myth or 90% of the brain myth states that humans generally use only one-tenth (or some other small fraction) of their brains. It has been misattributed to many famous scientists and historical figures, notably Albert Einstein. By extrapolation, it is suggested that a person may 'harness' or 'unlock' this unused potential and increase their intelligence.

M

09:30

Mat

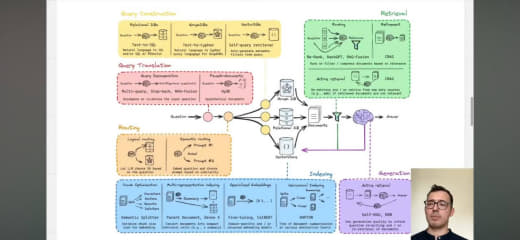

In reply to this message

Hi all! I found this diagram online for improving a RAG pipeline. Does anyone know of, or has anyone seen, code implementations of these elements? I'd be grateful)

ВК

10:12

Владислав Куриченко

In reply to this message

Yeah, the agents task eats a lot of tokens…

VF

10:22

Vasiliy Fadeev

In reply to this message

On average $0.10-0.12 per run over the file. Did you get the task with the questions working?

ВК

10:36

Владислав Куриченко

In reply to this message

No. For some reason the model sometimes outputs numbers as it should, sometimes dictionaries, and sometimes it won't compute the intermediate results at all and the functions crash (the agent passes a dictionary into the function)

10:37

In reply to this message

I've already spent 200 thousand tokens on this task, out of the 1 million allotted for the whole course

VF

10:37

Vasiliy Fadeev

In reply to this message

Had the same problem. Fixed it with the prompt and put a parser on top

VF

10:39

In reply to this message

No, I just wrote a parsing function.

The built-in parser from the examples (ResponseSchema): I don't understand how it works

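A minimal sketch of such a parsing function, covering the output shapes that came up in this thread: a bare number, a JSON blob like `{"action": "Final Answer", "action_input": "..."}`, or a full sentence. The name `extract_number` and the "take the last number in the text" heuristic are assumptions, not the author's actual code; the heuristic can pick the wrong number if the model restates the inputs after the result.

```python
import json
import re

# An integer or decimal number; "." or "," is accepted as the decimal separator.
NUMBER_RE = re.compile(r"-?\d+(?:[.,]\d+)?")


def extract_number(output):
    """Return the last number found in an agent's output as a float, or None.

    Accepts a plain string, a JSON string such as
    '{"action": "Final Answer", "action_input": "..."}',
    or an already-parsed dict with an "action_input" or "output" key.
    """
    if isinstance(output, dict):
        output = output.get("action_input", output.get("output", ""))
    text = str(output).strip()

    # If the string itself is a JSON object, look inside "action_input".
    try:
        data = json.loads(text)
    except json.JSONDecodeError:
        data = None
    if isinstance(data, dict):
        text = str(data.get("action_input", ""))

    matches = NUMBER_RE.findall(text)
    if not matches:
        return None
    # Heuristic: the final answer is usually the last number mentioned.
    return float(matches[-1].replace(",", "."))


raw = ('{"action": "Final Answer", "action_input": '
       '"The perimeter of a rectangle with sides 1.5 m and 2 m is 700 centimeters."}')
print(extract_number(raw))
print(extract_number("3,5"))
```

A regex parser like this costs no extra tokens; passing the answer back to the model, as suggested earlier in the thread, is the alternative when the output is too irregular for a pattern.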
ZR

10:41

Zalina Rusinova

In reply to this message

LlamaIndex has many of these elements implemented. I doubt you need to use all of them at once 😜

VF

10:43

Vasiliy Fadeev

In reply to this message

I'm stuck on the next task:

1. connections drop

2. 5 iterations aren't enough to find the answer

3. the wikipedia module's built-in parser throws an error on some examples

4. I can't figure out what to do about priorities: the agent keeps asking me instead of searching the sources

5. as soon as I put a full prompt into the agent's task, the API returns a token-limit error

ZR

10:43

Zalina Rusinova

In reply to this message

LlamaIndex can also build indexes using knowledge graphs, though it only works properly with GPT-4

M

10:44

Mat

In reply to this message

If I understand correctly, you need to put together the right combination of these approaches

ZR

10:44

Zalina Rusinova

In reply to this message

Also only with GPT-4. The problem there is at the stage of generating queries to Neo4j

ВК

10:44

Владислав Куриченко

In reply to this message

Yeah, I also ended up wanting to do parsing, but another problem is that functions wrapped in a tool won't just be called directly, or I haven't found the right method yet

ZR

10:44

Zalina Rusinova

In reply to this message

Right.. pick whatever works for your particular task

DA

10:45

Dolganov Anton

In reply to this message

Hmm

So I was actually lucky to finish the course on 500,000 tokens

)

ZR

10:45

Zalina Rusinova

In reply to this message

Only a few advanced RAG techniques are implemented there. It's easier to go straight to LlamaIndex

ВК

10:45

Владислав Куриченко

In reply to this message

Apparently you got everything right on the first try

VF

10:45

Vasiliy Fadeev

In reply to this message

When debugging, execution does reach the parser through tools, but the result doesn't match expectations

DA

10:46

Dolganov Anton

In reply to this message

Conspiracy theory

All these agents are a scam by the LLM makers

A ploy to sell tokens

)

VF

10:47

Vasiliy Fadeev

In reply to this message

Since you finished successfully, maybe you could share your experience with the questions above?

KA

10:57

Konstantin Altukhov

In reply to this message

Hi

my key has expired, could you extend it?

DA

12:59

Dolganov Anton

In reply to this message

Whew

I can't recall exactly (old age), but as I remember, in the last assignments, the ones closer to agents, I improvised less.

It was more like "repeat what was done in the examples".

Depending on the assignment, I may have done a makeshift concatenation of the prompt from the dataframe with clarifying instructions (like "give a short answer" / "answer only yes/no").

Regarding iterations

As far as I remember, on average it worked by the 3rd or 4th iteration.

But there was "variance", in the sense that it could give different answers from run to run.
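The makeshift concatenation described above, appending a clarifying instruction to each question, can be sketched with the standard library alone. This is an assumption about what such a helper might look like: the rows, the `answer_style` key, `INSTRUCTIONS` and `build_prompts` are hypothetical stand-ins for the dataframe columns mentioned in the message.

```python
# Hypothetical instruction suffixes keyed by the kind of answer a task needs.
INSTRUCTIONS = {
    "short": "Give a short answer.",
    "yes_no": "Answer only yes or no.",
}


def build_prompts(rows: list[dict]) -> list[str]:
    """Append the clarifying instruction for each row to its question."""
    prompts = []
    for row in rows:
        # Rows without a known answer_style get no extra instruction.
        suffix = INSTRUCTIONS.get(row.get("answer_style", ""), "")
        prompt = row["question"].strip()
        if suffix:
            prompt += "\n" + suffix
        prompts.append(prompt)
    return prompts
```

With a pandas dataframe the same idea is a row-wise string concatenation of the question column and the instruction column.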

VF

13:13

In reply to this message

Hi. In the assignment about the question-answering agent, I connected several tools, but the human tool is always the first one invoked.

How can I control the agent's priorities?

> Entering new AgentExecutor chain...

I should first check if the fact is true or false.

Action: human

Action Input: Is the fact that humans only use 10% of their brain true or false?

Is the fact that humans only use 10% of their brain true or false?

сделай сам ("do it yourself")

Observation: сделай сам

Thought: I should try another tool since the human was not able to provide an answer.

Action: wikipedia

Action Input: "10% of brain myth"

Observation: Page: Ten percent of the brain myth

Summary: The ten percent of the brain myth or 90% of the brain myth states that humans generally use only one-tenth (or some other small fraction) of their brains. It has been misattributed to many famous scientists and historical figures, notably Albert Einstein. By extrapolation, it is suggested that a person may 'harness' or 'unlock' this unused potential and increase their intelligence.
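The log shows the agent reaching for `human` first. In a ReAct-style agent the model chooses a tool based on the tool names and descriptions in its prompt, so one thing to try (not guaranteed to work with every model) is to list the tools in the desired order and state explicitly that `human` is a last resort. Below is a minimal stdlib sketch that builds such a tool section. `build_tool_section`, the `priority` field, the `search` tool and all descriptions are hypothetical; how the text gets into the agent's prompt (for example via a custom prompt prefix) depends on the framework.

```python
# Hypothetical tool list; a lower "priority" value means "try earlier".
TOOLS = [
    {"name": "human", "priority": 9,
     "description": "Ask the user. Use ONLY if no other tool can answer."},
    {"name": "wikipedia", "priority": 1,
     "description": "Look up facts in Wikipedia. Try this first."},
    {"name": "search", "priority": 2,
     "description": "Web search for facts not found in Wikipedia."},
]


def build_tool_section(tools: list[dict]) -> str:
    """Render tools in priority order, followed by an explicit ordering rule."""
    ordered = sorted(tools, key=lambda t: t["priority"])
    lines = [f"{t['name']}: {t['description']}" for t in ordered]
    order = " -> ".join(t["name"] for t in ordered)
    lines.append(f"Try tools in this order: {order}.")
    return "\n".join(lines)
```

If the task does not actually need input from a person, removing the `human` tool from the agent's tool list is the most reliable way to stop it from being called first.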

13:19

In reply to this message

Question: One of the instructors of this course is named Valera Babushkin.

Answer: False

C:\Users\vasil\AppData\Roaming\Python\Python311\site-packages\wikipedia\wikipedia.py:389: GuessedAtParserWarning: No parser was explicitly specified, so I'm using the best available HTML parser for this system ("lxml"). This usually isn't a problem, but if you run this code on another system, or in a different virtual environment, it may use a different parser and behave differently.

The code that caused this warning is on line 389 of the file C:\Users\vasil\AppData\Roaming\Python\Python311\site-packages\wikipedia\wikipedia.py. To get rid of this warning, pass the additional argument 'features="lxml"' to the BeautifulSoup constructor.

lis = BeautifulSoup(html).find_all('li')

Question: Pushkin was born in 1837 in New York.

Answer: False
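The `GuessedAtParserWarning` in this output is raised inside the `wikipedia` package, so the fix suggested by the warning itself (passing `features="lxml"` to `BeautifulSoup`) would mean editing library code. The warning does not change the answers; if it clutters the output, one option is to filter it with Python's standard `warnings` module. A minimal sketch, assuming the warning text keeps starting with "No parser was explicitly specified" as in the log above; `silence_bs4_parser_guess_warning` is a hypothetical helper name.

```python
import warnings

# The warning text in the log starts with this phrase.
# ASSUMPTION: bs4 keeps this wording. `message` is a regex matched
# (case-insensitively) against the start of the warning text.
BS4_PARSER_WARNING = r"No parser was explicitly specified"


def silence_bs4_parser_guess_warning() -> None:
    """Ignore bs4's "no parser specified" warning for the rest of the run."""
    warnings.filterwarnings("ignore", message=BS4_PARSER_WARNING)
```

Call it once before running the agent; other warnings are still shown.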

L

13:22

LLM Course | Chat

In reply to this message

Some advice for now: since this is an advanced-difficulty task, you can skip it and move on. There will be one more lesson on agents, and after it, it may become clearer how to solve this one.

AK

13:32

Artem Kotlov

In reply to this message

Good afternoon. I'm stuck on task 3.2 "Looks like something in LLM-ese?". I got the file, but apparently something is wrong with the delimiter or the data format (most likely the text field). I can't figure out what exactly the problem is; I can send the resulting file.
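Delimiter problems with a free-text field usually come from commas, quotes or line breaks inside the text. Without knowing the exact format task 3.2 expects, one way to rule this out is to let Python's `csv` module quote every field and then read the file back to check that each row still has the expected columns. A minimal sketch; `write_rows`, `check_file` and the `id`/`text` column names are hypothetical.

```python
import csv
from pathlib import Path


def write_rows(path: Path, rows: list[dict], fieldnames: list[str]) -> None:
    """Write rows with every field quoted, so commas/newlines in text are safe."""
    # newline="" lets the csv module control line endings, as its docs advise.
    with path.open("w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=fieldnames, quoting=csv.QUOTE_ALL)
        writer.writeheader()
        writer.writerows(rows)


def check_file(path: Path, fieldnames: list[str]) -> list[dict]:
    """Read the file back and fail loudly if a row lost or gained columns."""
    with path.open(newline="", encoding="utf-8") as f:
        reader = csv.DictReader(f)
        if reader.fieldnames != fieldnames:
            raise ValueError(f"unexpected header: {reader.fieldnames}")
        rows = list(reader)
    for i, row in enumerate(rows, start=1):
        # DictReader stores extra fields under the key None and fills
        # missing fields with None, so either case signals a broken row.
        if None in row or any(v is None for v in row.values()):
            raise ValueError(f"row {i} has the wrong number of fields")
    return rows
```

If `check_file` passes on the result file but the grader still rejects it, the expected delimiter, header or encoding is the next thing to compare against the assignment description.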

L

14:06

LLM Course | Chat

?

🤖 AI Practice | LLM | ChatGPT | GenAI 06.09.2024

12:44:11

In reply to this message

This new project hit the whole course team right in the old-school feels!

🤯😇

Meet the project by Vladimir Rudenko: a Telegram bot about the HMM3 universe (@heroes_game_bot). 🔥

An encyclopedia of the game, plus GPT generation of new heroes and characters from given attributes (the generation is handled by YandexGPT).

An original idea and a great implementation!

Share in the comments which castle you like to play and what you think of this project!
